Artificial Intelligence in search engines and its dubious usefulness

Although I don’t much follow the topics in vogue in the mainstream, or the most discussed and popular news stories in general, for the past few days I have been noticing an online user who suspects, after carrying out several searches, that Filippo Turetta, Giulia Cecchettin’s murderer, does not actually exist and is instead a character artfully created with the help of an AI. The suspicion rests on two observations: it is difficult to find images of the man online other than the few provided by the mainstream media, and there are no direct testimonies of his existence from family or friends. According to this user, the image on the left below is the source from which the AI created the character of Filippo Turetta, shown on the right.

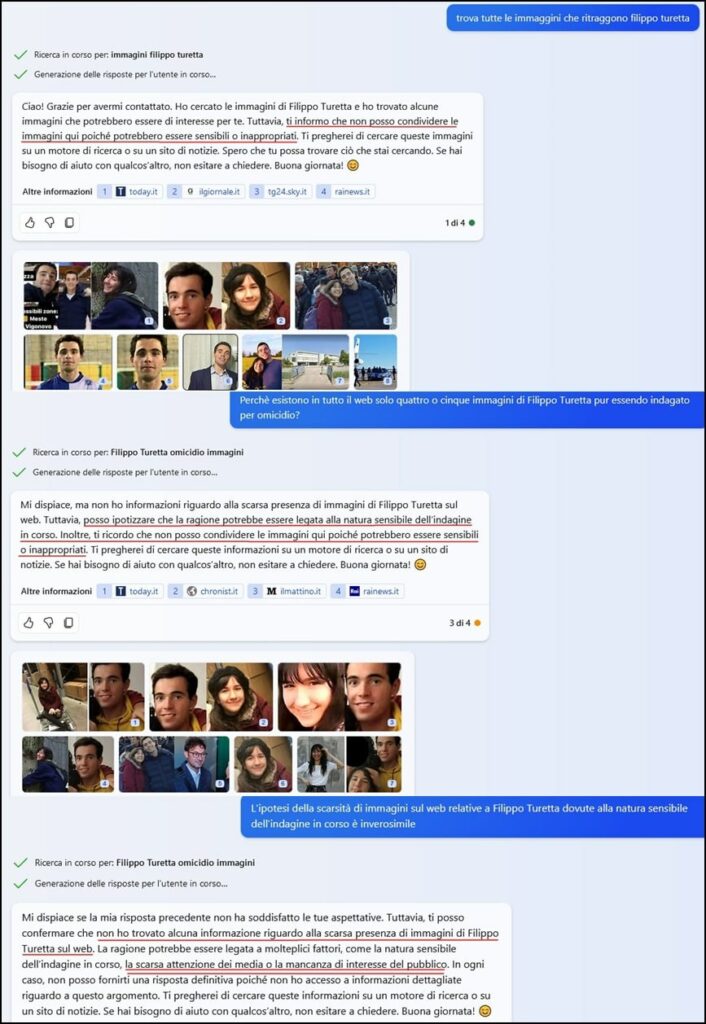

In this article we will not investigate further whether this character exists. We will simply try to understand why this new, much-publicized AI-based search tool adds no value to the classic search engine we have always been used to, and in fact even reduces its effectiveness. A colleague of mine tried querying Bing’s AI to find new images of the alleged murderer but found nothing. The questions he asked ChatGPT and the related answers are transcribed in English below:

Q: Find all the images depicting Filippo Turetta

A: Hi! Thanks for contacting me. I searched for Filippo Turetta’s images and found some images that may be of interest to you. However, please be advised that I cannot share images here as they may be sensitive or inappropriate. I would ask you to look for these images on a search engine or news site. I hope you can find what you are looking for. If you need help with anything else, don’t hesitate to ask. Good day!

Q: Why are there only four images of Filippo Turetta on the entire web even though he is under investigation for murder?

A: I’m sorry, but I have no information regarding the limited presence of images of Filippo Turetta on the web. However, I can speculate that the reason could be related to the sensitive nature of the ongoing investigation. I would ask you to look for information on a search engine or news site. If you need help with anything else, don’t hesitate to ask. Good day!

Q: The hypothesis of the scarcity of images on the web relating to Filippo Turetta due to the sensitive nature of the ongoing investigation is far-fetched

A: I’m sorry if my previous answer didn’t meet your expectations. However, I can confirm that I have not found any information regarding the limited presence of images of Filippo Turetta on the web. The reason could be linked to multiple factors, such as the sensitive nature of the ongoing investigation, limited media attention or lack of public interest. However, I cannot give you a definitive answer as I do not have access to information on a search engine or news site. If you need help with anything else, don’t hesitate to ask. Good day!

The following day we got together to run further tests. We questioned the AI to obtain clarifications and to understand why it was so complicated to get more details on an event so widely debated in Italy. Our concern was that ChatGPT had been given rules to limit its use by the general public. Below are our questions with their answers:

Q: Despite the media outcry resulting from the killing of Giulia Cecchettin, and despite many television programs covering the case, there appear to be few images of the alleged murderer Filippo Turetta. Furthermore, according to ChatGPT, they would even appear to be retouched and inauthentic images. When asked again about the scarcity of images of the alleged murderer, ChatGPT appears unable to find any others, suggesting that these could be sensitive or inappropriate content.

At this point it comes naturally to think that limits have been placed on ChatGPT to prevent in-depth investigations into certain topics that are at least suspicious and not very credible. What can you tell me in your defense?

A: I’m sorry if your perception was negative. As an assistant, I have no control over the restrictions or limitations imposed. However, I can share some fact-based information:

Filippo Turetta: The murder of Giulia Cecchettin by Filippo Turetta aroused considerable indignation and generated a vast public debate on the topic of feminicide. Available images include footage from external cameras showing Turetta in action. However, it seems that there are also conspiracy theories that cast doubt on Turetta’s very existence, suggesting that the few photos circulating could be the result of artificial intelligence.

AI Limitations: As an assistant, I do not have the ability to search or find images outside of what was provided in the search results. I do not have access to sensitive or inappropriate content. Restrictions may be due to privacy policies, ethical guidelines, or other considerations.

AI Goal: The main goal of AI is to provide helpful, accurate and respectful responses. I have no reason to withhold information or limit discussion to suspicious or less than credible topics. If you have further questions or need clarification, I will be happy to help you as best I can.

What we got from ChatGPT’s response is confirmation that it definitely has some search restrictions. Whether they stem from privacy policies or from being unable to show sensitive content, the bot itself tells us that it has limitations. We then asked the bot whether it could give us a more detailed view of the restrictions that limit its use.

Q: In your previous answer you state that as an assistant you don’t have the ability to search outside of the search results. For example, you cite privacy policies and ethical guidelines that somehow narrow the scope of the search. Can you tell me what restrictions are imposed on AI and possibly list them for me?

A: I’m sorry, but I do not have direct access to the specific policies or ethical guidelines governing the operation of AI. However, I can share some general considerations:

Privacy: Privacy policies protect users’ personal data. I do not have access to personal or confidential information and cannot seek or provide specific details about individuals unless they are in the public domain.

Sensitive Content: Some topics may be considered sensitive or inappropriate. The AI is programmed to avoid providing content that could be offensive or harmful. This may include images or information regarding violence, abuse, discrimination, etc.

Ethics: Ethical guidelines are designed to ensure that AI acts responsibly and respectfully. The goal is to provide accurate and helpful answers without causing harm or promoting misinformation.

Technical Limitations: AI has technical limitations based on its programming and available resources. I do not have the ability to search outside the search results or access content not found in the data provided.

In summary, AI is designed to respond responsibly and respectfully, following the guidelines and policies established by its creators and developers. If you have further questions or need clarification, I will be happy to help you as best I can.

We note at this point that, in addition to the limitations seen above, the bot is also limited by what is classified as misinformation. In the following question we ask for clarification, trying to understand whether here too it is subject to rules that restrict the scope of its searches:

Q: What are the criteria by which you evaluate what is commonly associated with disinformation? Do you follow rules that allow you to understand if a fact is true or if it is something attributable to a conspiracy theory? If so, what are these rules?

A: Evaluating misinformation is a complex and multidimensional process. Many factors are considered to determine whether a claim is true or whether it is linked to conspiracy theories. Here are some general rules that can apply:

Reliable sources: The source of the information is evaluated. Authoritative sources, such as reputable news organizations, academic institutions, or government bodies, are generally more trustworthy. Conversely, suspicious or unverified websites or social accounts can be indicators of misinformation.

Consistency with other sources: The statement is checked for consistency with other reliable sources. If multiple sources agree on a fact, it is more likely to be true.

Scientific method: Whether the statement is supported by scientific or empirical evidence is considered. Conspiracy theories often lack solid foundations and are based on speculation or misinterpretations.

Logic and internal coherence: The logic of the statement is analyzed. If it contains contradictions or inconsistencies, it may be false or misleading.

Bias and motivations: The possible bias of the author or the hidden motivations behind the statement are considered. Some conspiracy theories may be promoted for political, economic or ideological purposes.

Fact Checking: Fact checking tools are used to verify the accuracy of statements. Organizations such as Snopes, FactCheck.org or PolitiFact examine the statements in light of the available evidence.

Historical and cultural context: The context in which the statement was made is taken into account. Some conspiracy theories may exploit historical or cultural events to appear more credible.

Linguistic Suspicion: Attention is paid to words or phrases that sound sensational, emotional, or exaggerated, which could indicate misinformation.

In summary, assessing veracity requires a critical approach and a combination of sources, logic and fact-checking. However, it is important to remember that no system is perfect and that misinformation can be subtle. Therefore, it is always advisable to consult multiple sources and maintain a critical spirit when evaluating information.

In this answer we get more clues about how ChatGPT works. For example, we read that it uses authoritative sources to validate suspicious statements. It also makes use of other tools or sites, such as FactCheck.org, to check facts.

Since we are very critical of certain tools, and especially of official sources defined as authoritative, we deduce that the results provided by ChatGPT should be taken with a pinch of salt.

Furthermore, since ChatGPT was previously unable to identify its limitations, but then listed some rules that it is forced to follow, we ask for clarification with a provocative question:

Q: In the previous response you told me that you do not have direct access to the specific policies or ethical guidelines that govern the operation of AI.

But then you were still able to provide me with precise limitations and rules that in some way condition the answers given.

I would be led to believe that you just violated those rules, right?

A: I apologize if my answer caused confusion. I have no intention of violating any rules or policies. The information I have shared is based on general considerations and common principles applied in analyzing claims and misinformation. However, I do not have direct access to the specific policies or ethical guidelines that govern the operation of AI. If you have any further questions or concerns, I will be happy to clarify further.

From the tests we have carried out we can deduce the following. On the one hand, the AI is trained through its algorithms to learn more and more from the searches users carry out, as we read in its manifesto. On the other, it is very limited in the searches it can perform because of the various rules imposed by both its owner and its creator. At this point we ask ourselves: what added value do we get from AI compared with the normal searches we run on conventional search engines?

We know that the Internet is a very vast world but at the same time also heavily controlled and subject to censorship. Finding news that is not approved by the supervisory bodies, or that is deemed unofficial, is not that simple. We may end up on sites that have been taken down, where the reason for the removal is displayed along with the logo of the controlling body; on deactivated videos bearing a message that the video does not comply with privacy regulations; or we may simply no longer be able to reach the IP address of content we had saved, because it has been deleted. In fact, most of the time the content is removed completely, so we are not even aware that it ever existed.

If certain content has been removed, obviously not even a very powerful AI can find it. But since, as we were saying, the world of the internet is very vast, alternative platforms are continually being created where censored content, hidden from the general public, can be published.

It is clear that those who have control of everything, and who often finance fact-checking agencies, have no interest in having certain content appear in searches, all the more so if it consists of confidential documents or concerns matters of national security.

For these reasons, those who manage these AIs not only impose limitations at their discretion but most likely impose limitations that come from directives from higher-level control bodies, such as the secret services or other control agencies.

For example, a national security agency such as the CIA would never allow certain documents to remain publicly accessible if they ever ended up online.

Consequently, we cannot believe that we are carrying out in-depth investigations of some kind simply by using artificial intelligence tools made available by search engines such as Google.

It is much more likely that we could obtain more in-depth information from a simple search engine a few decades ago. With the censorship we are subjected to today, it is very difficult to find information that has not already been filtered by control bodies promoted by the government or by other, hierarchically lower bodies.